Time for a New Project

A couple of weeks ago, Hubway, Boston’s bike share service, announced its 2017 Data Challenge. For the challenge, Hubway is providing trip data for previous years, station data, and access to real-time data. Entrants will build a wide variety of visualizations and analyses. I think I might participate, so I downloaded the detailed month-by-month trip data for 2015 and 2016, as well as the station data, and started to experiment. This post outlines some of my early work, before I actually figure out what I will (might) do for the challenge.

For those interested, submissions are due on April 10, 2017.

Making the Data Usable

Hubway provided the data in the only format that matters: CSV files. Since I don’t do much with text files (I am a database person), I wrote a few PostgreSQL scripts to wrangle the data from the CSV files into PostgreSQL.

The first script I wrote was a loading script, Hubway2017_loading.sql. The script is pretty simple and does the following:

- Build the tables – the schema is pretty straightforward

- Load data into staging tables

- Check each column for ‘bad values’, i.e., values that don’t match the column’s data type. The files use ‘\N’ for null, so make sure you check for that.

- Load data into the final tables – I keep each year in a separate table.

- Build geometry values for geographic analysis and visualization
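The loading script itself is PostgreSQL and isn’t reproduced here. As a rough illustration of the ‘bad values’ check, here is a minimal Python sketch that walks a CSV file and flags any value that is neither the ‘\N’ null marker nor parseable as its column’s type. The column names, types, and timestamp format in `PARSERS` are assumptions for illustration, not the actual Hubway schema.

```python
import csv
import io
from datetime import datetime

# Hypothetical column -> parser mapping; the real Hubway files may use
# different column names and timestamp formats.
PARSERS = {
    "tripduration": int,
    "starttime": lambda s: datetime.strptime(s, "%Y-%m-%d %H:%M:%S"),
    "start_station_id": int,
    "birth_year": int,
}

NULL_MARKER = r"\N"  # the files use \N to mark missing values


def find_bad_values(csv_text, parsers=PARSERS):
    """Return (row_number, column, raw_value) for each value that is neither
    the null marker nor parseable as its column's type."""
    bad = []
    reader = csv.DictReader(io.StringIO(csv_text))
    for row_num, row in enumerate(reader, start=2):  # row 1 is the header
        for column, parse in parsers.items():
            raw = row.get(column)
            if raw is None or raw == NULL_MARKER:
                continue  # column absent or explicitly null: not a type error
            try:
                parse(raw)
            except ValueError:
                bad.append((row_num, column, raw))
    return bad


sample = r"""tripduration,starttime,start_station_id,birth_year
542,2016-08-09 07:15:00,67,\N
abc,2016-08-09 07:20:00,22,1985
"""
print(find_bad_values(sample))  # [(3, 'tripduration', 'abc')]
```

Treating ‘\N’ as a legitimate null rather than a type error mirrors the note above: it is the files’ null marker, not dirty data.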

The second set of scripts are analysis scripts. To get the analysis started, I wrote three simple ones.

Feel free to check out my GitHub page for this project and grab whatever code you like. I expect to add more to this project over the next couple of weeks.

Starting the Visualization

At the end of the Hubway2017_loading.sql script, I loaded the station data into its own table. From that table, I used QGIS to create a GeoJSON file of the stations, including each station’s reported capacity. I am using GeoJSON for a couple of reasons: it works more seamlessly with CARTO, and it can properly store a date value (something shapefiles don’t do well).
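I built the file in QGIS, but for anyone who prefers to script it, here is a minimal Python sketch of the same idea: one GeoJSON point feature per station, with its capacity as a property. The input keys (`station_id`, `name`, `latitude`, `longitude`, `capacity`) and the example row are hypothetical; adjust them to match the actual station file.

```python
import json


def stations_to_geojson(stations):
    """Build a GeoJSON FeatureCollection of station points.

    `stations` is an iterable of dicts with hypothetical keys
    'station_id', 'name', 'latitude', 'longitude' and 'capacity'.
    """
    features = []
    for s in stations:
        features.append({
            "type": "Feature",
            # GeoJSON (RFC 7946) orders coordinates as [longitude, latitude]
            "geometry": {
                "type": "Point",
                "coordinates": [float(s["longitude"]), float(s["latitude"])],
            },
            "properties": {
                "station_id": s["station_id"],
                "name": s["name"],
                "capacity": int(s["capacity"]),
            },
        })
    return {"type": "FeatureCollection", "features": features}


# Hypothetical example row, not real Hubway data
example = [
    {"station_id": "example-1", "name": "Example Station",
     "latitude": "42.3521", "longitude": "-71.0551", "capacity": "19"},
]
print(json.dumps(stations_to_geojson(example), indent=2))
```

The longitude-first coordinate order is the usual gotcha when hand-building GeoJSON; getting it backwards drops Boston somewhere in Antarctica.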

I have uploaded the dataset to the Hubway project on my GitHub here.

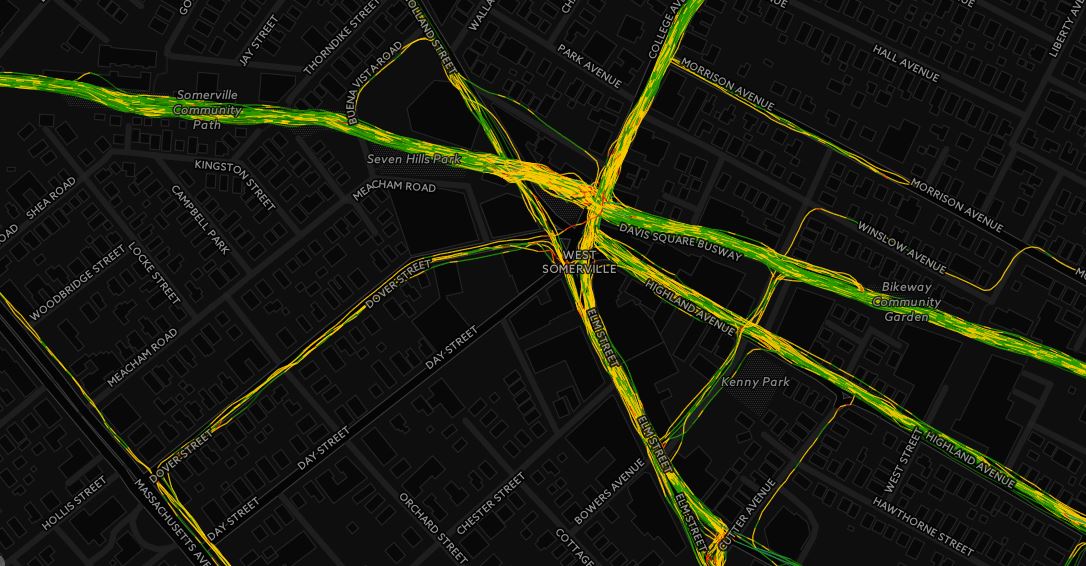

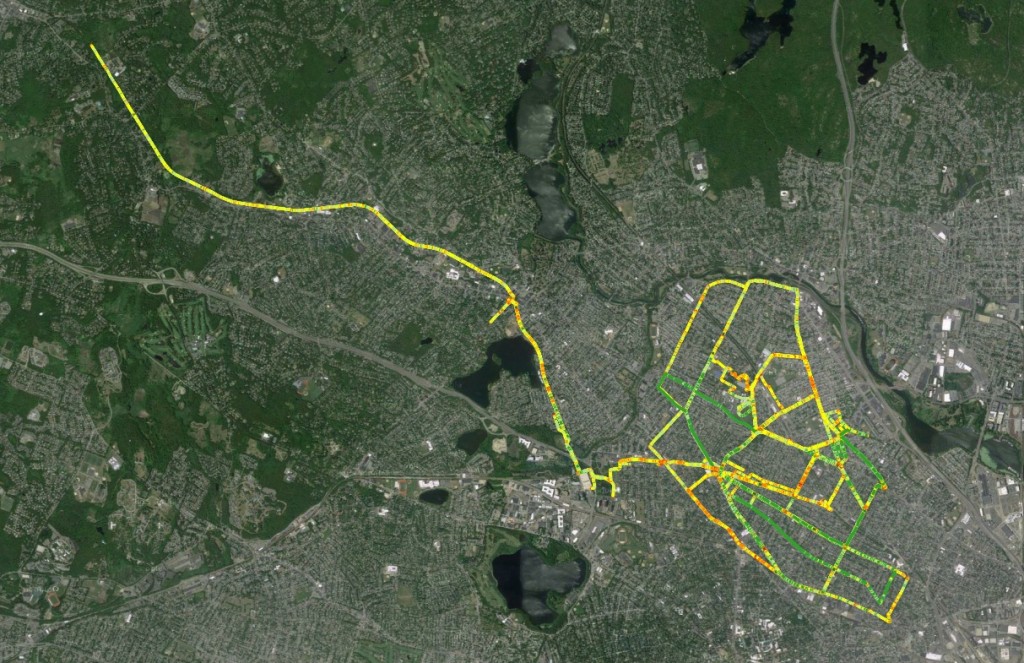

For anyone who knows Boston/Somerville/Cambridge/Brookline, this pattern of stations will make sense. The high-capacity stations stand out near South Station, MIT, and Mass General. There are 187 stations in this dataset; however, I need to double-check that the stations that appear in the map below were actually in use during 2015/2016, as stations aren’t necessarily permanent.
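One way to do that double check is to compare the station table against the trip data. This hypothetical Python sketch records the first and last day each station had a trip start and lists the stations with no starts at all; the trip keys `start_station_id` and `start_day` are assumed names, not the actual column names.

```python
from datetime import date


def station_activity(station_ids, trips):
    """Summarize when each listed station was actually used.

    Returns (spans, unused): spans maps station_id -> (first_day, last_day)
    with trip starts there; unused lists stations with no trip starts.
    Trips are dicts with hypothetical keys 'start_station_id' and 'start_day'.
    """
    spans = {}
    for trip in trips:
        sid, day = trip["start_station_id"], trip["start_day"]
        first, last = spans.get(sid, (day, day))
        spans[sid] = (min(first, day), max(last, day))
    unused = sorted(set(station_ids) - set(spans))
    return spans, unused


trips = [
    {"start_station_id": 1, "start_day": date(2016, 5, 1)},
    {"start_station_id": 1, "start_day": date(2016, 4, 2)},
]
spans, unused = station_activity([1, 2], trips)
print(spans)   # station 1 active from 2016-04-02 to 2016-05-01
print(unused)  # [2]
```

A station whose span covers only part of the year is one that came in or out of service, which is exactly what needs checking before trusting the map.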

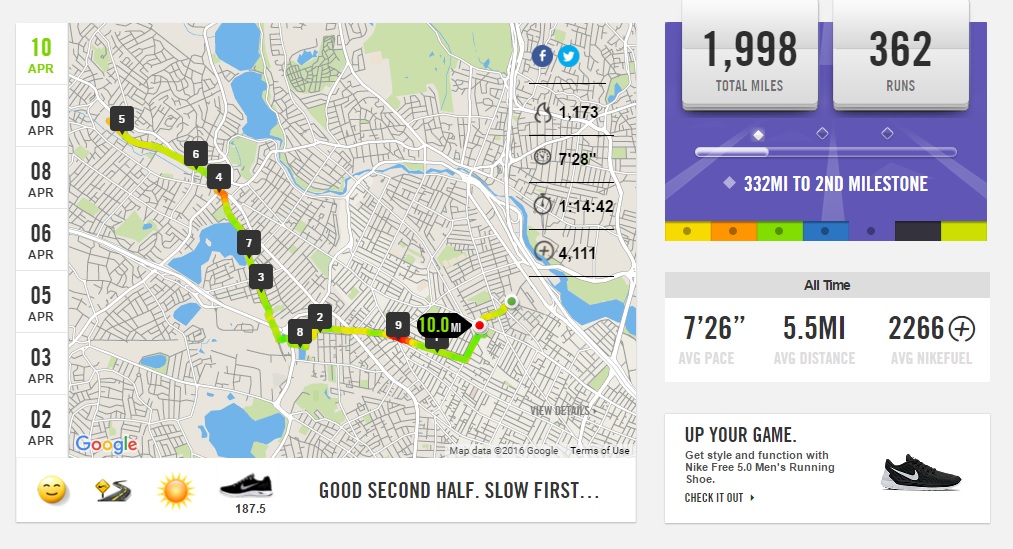

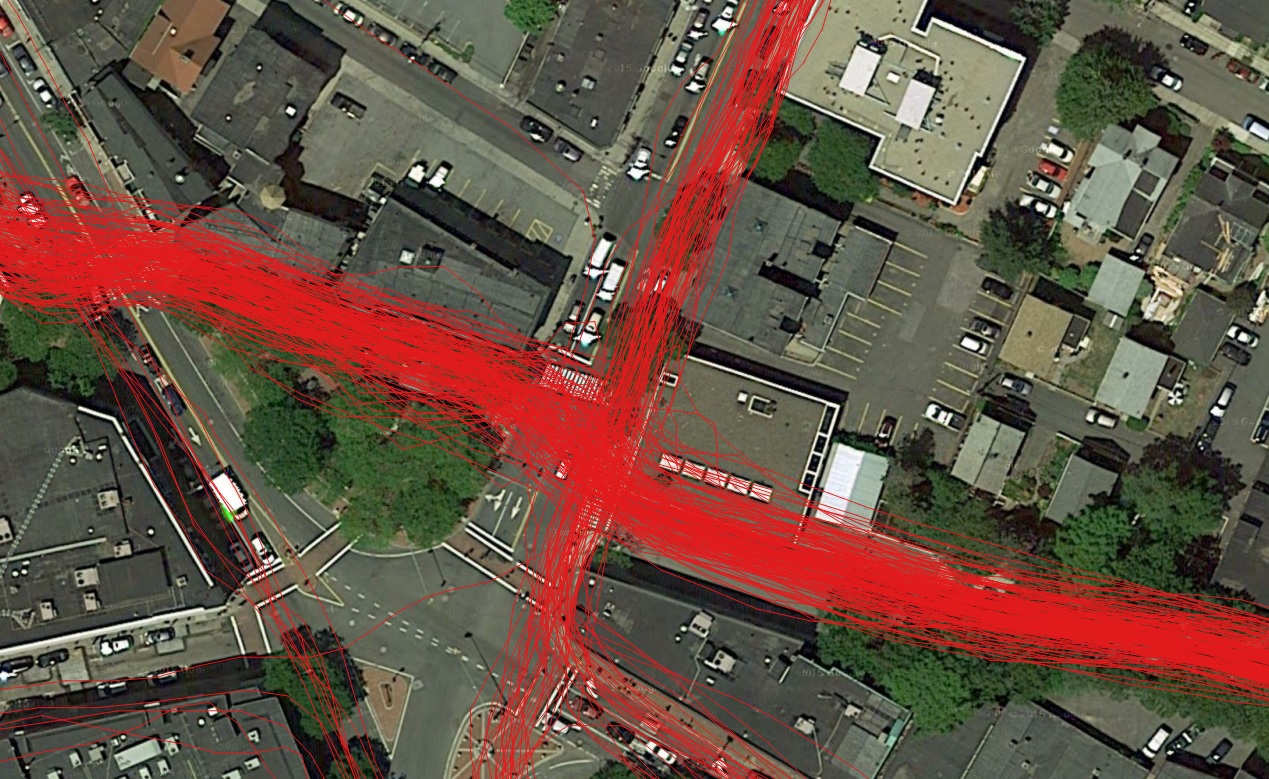

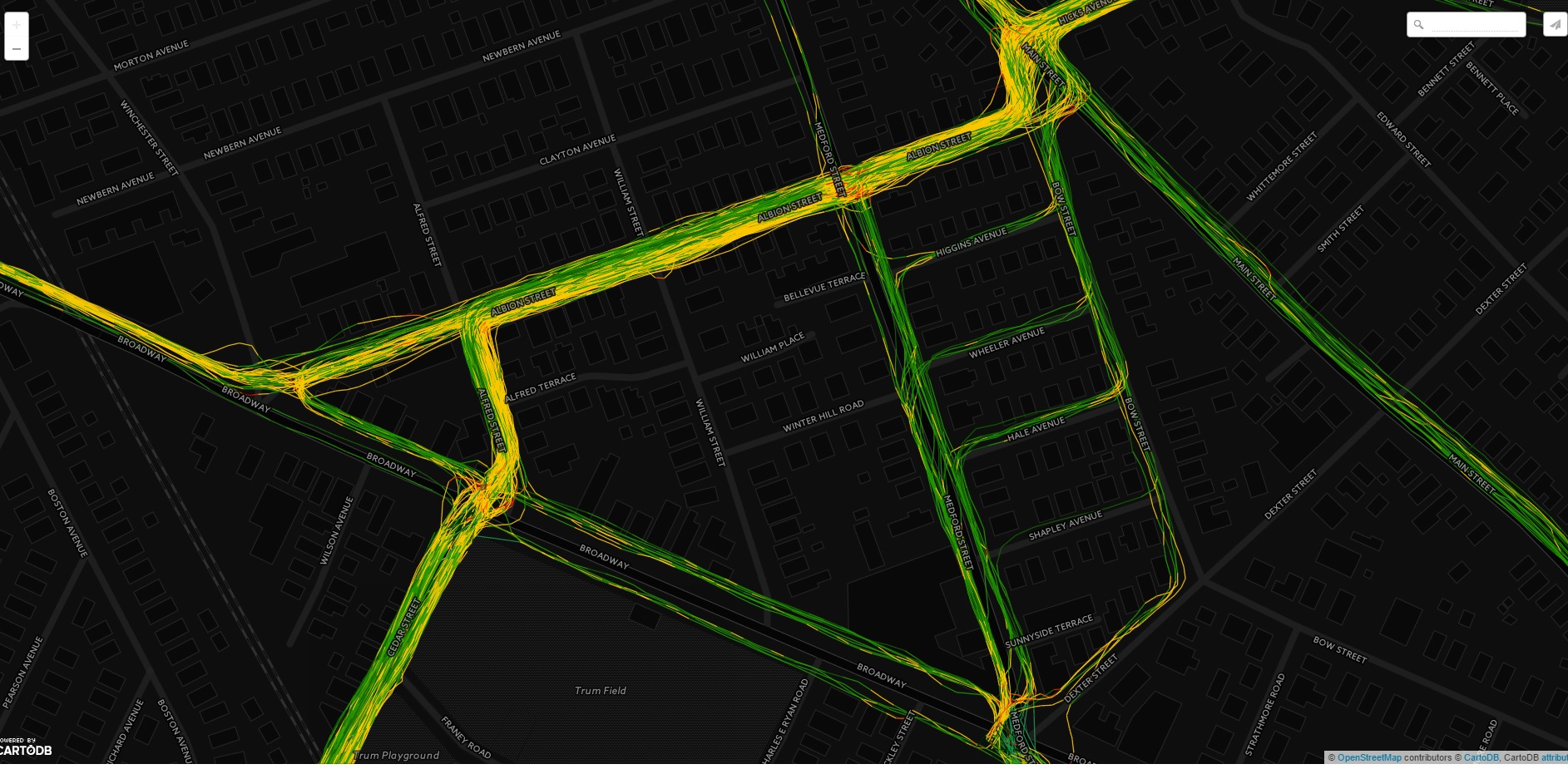

The next visualization I wanted to make was a time-series map displaying the daily trip starts across the entire system for 2016. The first step was to build a table with all the relevant data. For those interested in the script, check out the OriginsByDay_Hubway2016.sql script. Once the script had run and the data was created, I built a GeoJSON file in QGIS and uploaded it to CARTO. CARTO is a great online mapping service that is easy to use. If you are looking to make some maps for this challenge and don’t want to spend a lot of time learning how to map or how to use mapping-specific software, I encourage you to check out CARTO.
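The heart of a table like this is presumably a count of trip starts per station per day. A minimal Python sketch of that count follows; it is not the SQL script itself, and the `starttime`/`start_station_id` keys and the timestamp format are assumptions.

```python
from collections import Counter
from datetime import datetime


def starts_by_day(trips, time_format="%Y-%m-%d %H:%M:%S"):
    """Count trip starts per (calendar day, start station).

    Each trip is a dict with hypothetical keys 'starttime' (a timestamp
    string in `time_format`) and 'start_station_id'.
    """
    counts = Counter()
    for trip in trips:
        day = datetime.strptime(trip["starttime"], time_format).date()
        counts[(day, trip["start_station_id"])] += 1
    return counts


trips = [
    {"starttime": "2016-08-09 07:15:00", "start_station_id": 67},
    {"starttime": "2016-08-09 17:40:00", "start_station_id": 67},
    {"starttime": "2016-08-10 08:05:00", "start_station_id": 22},
]
for (day, station), n in sorted(starts_by_day(trips).items()):
    print(day, station, n)
```

Joining these counts to the station coordinates gives one point per station per day with a date and a count, which is roughly the shape a time-stepped map layer expects.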

The following map steps through each day, visualizing the number of trip starts with CARTO’s Torque feature. It is fun to watch trip starts ebb and flow with the seasons. You can see stations come in and out of service across the city throughout the year, spot major peaks and valleys in usage, and observe the strong relationship in trip starts between downtown Boston and the outlying stations.

Click here for the full-size version (it works much better than the version constrained by my WordPress CSS).

This simple visualization has given me a number of ideas for what to look into next, including:

- Quantify the relationships between usage and weather

- Giver and Taker stations: what is the net usage by station for each day?

- Is that station at MIT really that busy every single day?

- Relationships between population density and usage

- Usage on major days in the city, e.g., Marathon Monday, MIT/Harvard/BU move-in days, college graduation days, Boston Calling, and bad T days (those who ride the T know what I mean).
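For the Giver and Taker idea, one possible definition (my assumption at this point, not a settled metric) is arrivals minus departures per station per day: negative means the station gives bikes to the system, positive means it takes them in. A minimal Python sketch with hypothetical trip keys:

```python
from collections import defaultdict


def net_flow_by_day(trips):
    """Return {(day, station_id): arrivals - departures}.

    Negative values mark 'giver' stations (more bikes leave than arrive),
    positive values mark 'taker' stations. Trips are dicts with hypothetical
    keys 'start_day', 'end_day', 'start_station_id' and 'end_station_id'.
    """
    net = defaultdict(int)
    for trip in trips:
        net[(trip["start_day"], trip["start_station_id"])] -= 1  # a departure
        net[(trip["end_day"], trip["end_station_id"])] += 1      # an arrival
    return dict(net)


trips = [
    {"start_day": "2016-08-09", "end_day": "2016-08-09",
     "start_station_id": "A", "end_station_id": "B"},
    {"start_day": "2016-08-09", "end_day": "2016-08-09",
     "start_station_id": "C", "end_station_id": "C"},  # round trip nets to 0
]
print(net_flow_by_day(trips))
```

Keying arrivals by the end day rather than the start day keeps overnight trips on the day the bike actually shows up.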

There are some real patterns in this dataset and it will be fun to look into them and share the results.

Busiest Days in 2016

The last script I put together finds the busiest days by trip starts. The busiest day was August 9, 2016, with 6,949 starts. That was a Tuesday, which blows my mind; I am shocked that the busiest day wasn’t on a weekend.
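The busiest-days query boils down to counting starts per calendar day and sorting. Here is a minimal Python sketch of that ranking, not the author’s SQL, which also reports the weekday name so the weekday/weekend pattern is easy to spot. The input is assumed to be one `datetime.date` per trip start.

```python
from collections import Counter
from datetime import date


def busiest_days(start_days, top_n=10):
    """Rank calendar days by number of trip starts.

    `start_days` is an iterable of datetime.date objects, one per trip.
    Returns (day, weekday_name, starts) tuples, busiest first.
    """
    totals = Counter(start_days)
    return [(day, day.strftime("%A"), n) for day, n in totals.most_common(top_n)]


# Sanity check on the weekday of the busiest day
print(date(2016, 8, 9).strftime("%A"))  # Tuesday
```

`strftime("%A")` follows the current locale; in the default C locale it returns English day names.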

The rest of the busiest days all had over 6,000 starts, all fell between the end of June and the end of September, and again, all were weekdays. This surprised me, as I tend to think of Hubway as being used by tourists, presumably on weekends (especially downtown). That the busiest days are weekdays is actually a real positive for the system, as it suggests people treat Hubway as a viable alternative transit option.

As you can see, there is still a ton to do. I need to dig into this data some more and start planning the story I want to tell. I also need to do more QA on the data so I fully understand what I am dealing with. The biggest part of any data analysis project isn’t generating fancy interactive graphics (which no one uses) or writing groundbreaking algorithms; it is the dirty data work. Without checking and double-checking the inputs, the analysis could be wrong, and no one wants that.