One of the great things about living in Boston is that there is a very active geo-community. Every few weeks there is something interesting happening, whether it is an AvidGeo meet-up, a geo-colloquium at one of the many schools in Boston, or an industry-sponsored event.

One of those events happened this past Monday at Space with a Soul in Boston’s Innovation District, organized by Avid Geo and the Eclipse Foundation’s LocationTech group. The event focused on open source geo-based projects. The room was full of geo-thinkers from a variety of backgrounds, and thanks to a number of sponsors (AppGeo, Azavea, IBM, Actuate) there was plenty of food and beer! Added bonus, participants from the PostGIS code sprint were in town!

The lightning talk format – five minutes, 20 slides, auto-advancing – works really well for these types of events. The speakers are energized and the crowd stays captivated. I tried in vain to keep up with Twitter during the event. Let’s take a look at my 140-character rundown of the evening.

Quick note – I missed a couple of speakers’ names. If you know them, please post a comment so I can update accordingly. UPDATE – I only need one more name!

Second quick note – ignore the grammar mistakes in my tweets. I am horrible with my thumbs.

Andrew Ross from LocationTech opened up the evening. He talked about the mission of LocationTech and explained how the Eclipse Foundation helps open source projects get off the ground and stay relevant.

Great crowd for #ignitelocationtech at Space with a Soul #boston #geo @avidgeo #locationtech twitter.com/GISDoctor/stat…

— Ben Spaulding (@GISDoctor) March 25, 2013

Max Uhlenhuth from SilviaTerra then gave a great overview of how he started contributing to open source projects. Max had a number of great points, but a couple really stuck with me. In his professional career he has only used open source software, and he was using FOSS in high school! When I was in high school my parents had just gotten this new thing called the “internet.” His best quote came about halfway through his talk:

“I wanted to contribute back to the open source community so I didn’t feel like a leech” #ignitelocationtech #foss #geo #Boston

— Ben Spaulding (@GISDoctor) March 25, 2013

Michael Evans and his colleague whose name escapes me (if you know, please let me know so I can update this post) talked about ongoing efforts in Boston’s City Hall to improve data sharing, analysis and visualization. They talked about the extremely popular blizzard reporting site that famously crashed and how it was both a success and a failure. It was a success because it was so popular, and it was a failure because it crashed, and crashed hard. They also talked about efforts to make data resources work more efficiently within City Hall.

100000s of visitors within an hour- “it was really freaking popular…” 2013 #Boston blizzard reporting site #ignitelocationtech #geo

— Ben Spaulding (@GISDoctor) March 25, 2013

I’ve seen Jeffrey Warren from the Public Laboratory give talks a few times over the past couple of years, and he and his colleagues are always doing something innovative. He didn’t disappoint during his talk on Monday. He talked about the Public Laboratory’s open source spectrometer. It was pretty amazing. I wish he had another 25 minutes to go into more detail.

Another great talk from Jeffrey Warren from public laboratory – open source spectrometers #awesome #ignitelocationtech

— Ben Spaulding (@GISDoctor) March 25, 2013

Leaflet made an appearance at the event as well. Calvin Metcalf, Leaflet guru and cat enthusiast, gave a talk titled “Leaflet for some cats.” Using Max Ogden’s JavaScript for Cats for inspiration, Calvin went through a few examples of how easy Leaflet is to use and customize. If you haven’t tried Leaflet yet, you should. Calvin’s slides can be found here.

@cwmma – #leaflet for cats! It is that easy! #ignitelocationtech #opengeo #Boston twitter.com/GISDoctor/stat…

— Ben Spaulding (@GISDoctor) March 25, 2013

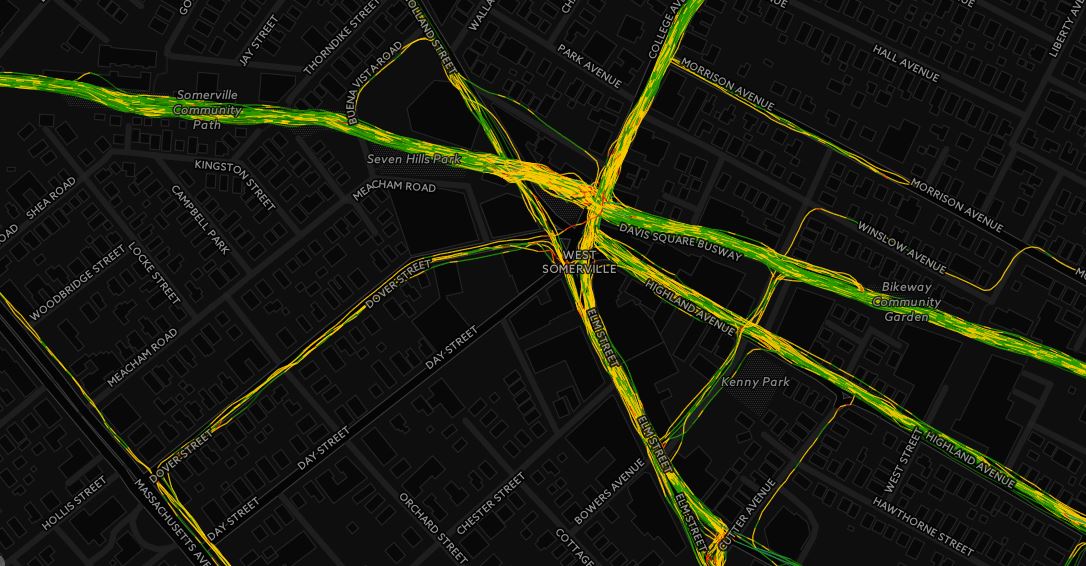

Christian Spanring from the Metropolitan Area Planning Council talked about the Hubway visualization hackathon. If you are in the Boston area you have probably seen some of the results of this event over the past several months. The Hubway folks released a whole bunch of data – ride numbers, origin/destination data, temporal data – and then held a hackathon. Christian talked about the variety of people who participated and the variety of creative ways they analyzed and visualized the data.

#HubwayMapping hackathon – terrific analysis and visualization @cspanring #ignitelocationtech #Boston @avidgeo

— Ben Spaulding (@GISDoctor) March 26, 2013

UPDATE: Thanks to Andrew Ross I got an update on the evening’s last speaker (I didn’t catch his name at first). The last talk was from Ken Walker from Eclipse, talking about the Orion Editor. I’m not too familiar with Orion, which is a browser-based tool for developing on and for the web, but it looked like something I should learn about, soon. I encourage you to check out the link to learn more. Ken was kind enough to post his slides as well.

Overall, this was a great event. Avid Geo and LocationTech did a great job putting this together and the speakers inspired those in the audience. I really think you are seeing the future of geo at events like this. Geo is no longer just for technicians working in municipal offices. Geo is moving beyond GIS, web mapping, and cartography. Geo is now everywhere and anywhere and that is a great thing. In the near future geo will be even more ubiquitous throughout the business and technology worlds and there will be a growing demand for people trained in the geospatial sciences. Events like this keep the field moving forward!